Author: Vadim Belski, Head of AI, Principal Architect at ScienceSoft

Even with strong AI/ML, orchestrating claim investigation still leans heavily on people. Agentic AI offers something insurers haven’t had before: autonomous, adaptive automation that mirrors the end-to-end workflow of a human investigation team.

Why Traditional Claims AI Still Hits Bottlenecks

Most US carriers already have some traditional AI/ML in their stack to reliably score claims and send the riskiest ones to the special investigation unit (SIU). But despite impressive progress over rule-based tools, the fraud detection workflows built around these systems remain largely reactive and manual. A typical high-risk case still demands hours, if not days, of manual orchestration: cross-checking and fusing scores across models, contacting claimants, scanning call notes, and compiling summaries for investigators. That human “glue” throttles throughput and extends fraud detection cycle time. ScienceSoft’s AI consultants believe that traditional AI/ML is effective as fraud decision support, but because it doesn’t act, the surrounding investigation friction persists.

There’s a risk angle, too. Shallowfakes, modified invoices, and entirely fabricated vendor reports now increasingly show up in claim submissions, an issue that big players like Allianz and Zurich have flagged publicly with the rise of generative AI. Conventional image analysis and natural language processing algorithms can’t effectively capture the nuances of deeply edited media, leaving fraud to thrive in blind spots. Adding a layer of human validation would bring insurers back to slow and inefficient manual claim reviews.

AI Agents as an Investigation Squad You Can Program

Agentic AI, at its core, directly targets the friction in disconnected claims fraud detection workflows. The simplest way to describe an agentic approach is to imagine a virtual, coordinated team of claims investigators. Each agent owns a narrow skill: one validates media and detects manipulation, another retrieves third-party data for evidence enrichment, a third runs network anomaly analysis, a fourth calls claimants to verify submissions, and a final agent compiles the findings into an SIU report. On top of these, there’s a supervisor agent that triages cases, assigns tasks to agents, handles dependencies, and logs agent activity for auditability.

Just like human fraud specialists, AI agents can query relevant data sources for contextual insights and trigger automation across claim investigation tools, all without constant manual instruction and oversight. Instead of multiple risk scores produced in disparate pipelines, the insurer gets an explainable brief of findings across all investigation streams, with next-best actions proposed based on a holistic claim assessment. Human investigators retain the authority to escalate, clear, or deny a claim, or to direct agents to take specific steps.
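The supervisor pattern described above can be illustrated with a minimal Python sketch. It is not ScienceSoft’s implementation: the agent functions, claim fields (`photo_hash`, `watchlist`, and so on), and action names are hypothetical placeholders, and real agents would call models and external services rather than compare values in a dictionary.

```python
from concurrent.futures import ThreadPoolExecutor
from datetime import datetime, timezone


# Hypothetical task agents: each takes a claim dict and returns one finding.
def media_agent(claim):
    reused = claim.get("photo_hash") in claim.get("known_photo_hashes", set())
    reason = "photo seen in an earlier claim" if reused else "no photo reuse found"
    return {"agent": "media", "suspicious": reused, "reason": reason}


def enrichment_agent(claim):
    flagged = claim.get("repair_shop") in claim.get("watchlist", set())
    reason = "repair shop is on the watchlist" if flagged else "no third-party alerts"
    return {"agent": "enrichment", "suspicious": flagged, "reason": reason}


class Supervisor:
    """Runs the agents in parallel, logs every finding, and proposes an action."""

    def __init__(self, agents):
        self.agents = agents
        self.audit_log = []

    def investigate(self, claim):
        # pool.map keeps the findings in the same order as self.agents.
        with ThreadPoolExecutor() as pool:
            findings = list(pool.map(lambda agent: agent(claim), self.agents))
        for finding in findings:
            self.audit_log.append({
                "ts": datetime.now(timezone.utc).isoformat(),
                "claim_id": claim["id"],
                **finding,
            })
        suspicious = any(f["suspicious"] for f in findings)
        # The action is only a proposal; a human investigator decides.
        proposed = "refer_to_siu" if suspicious else "fast_track"
        return {"claim_id": claim["id"], "findings": findings, "proposed_action": proposed}


claim = {
    "id": "C-1",
    "photo_hash": "abc",
    "known_photo_hashes": {"abc"},
    "repair_shop": "Shop A",
    "watchlist": set(),
}
supervisor = Supervisor([media_agent, enrichment_agent])
brief = supervisor.investigate(claim)
```

The brief carries each agent’s reason next to its verdict, so the investigator sees why a claim was proposed for referral rather than a bare score.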

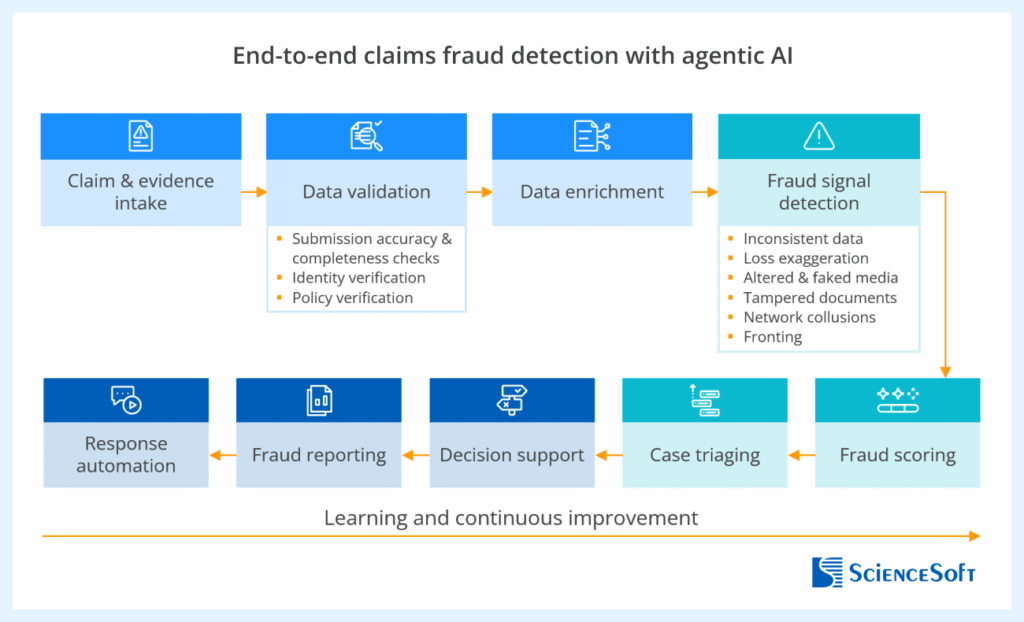

The blueprint assembled by the team of ScienceSoft’s AI architects shows an end-to-end agentic investigation workflow and highlights key activities performed by specialized agents:

How Insurers Benefit From Agentic Fraud Detection

Based on insights from early adopters and ScienceSoft’s practice, the benefits of agentic AI for claims fraud detection fall into four major categories:

Faster, earlier fraud capture. Specialized agents run evidence gathering, validation, and pattern checks across various types of fraud in parallel, collapsing days of manual review into minutes. Carriers can surface suspicious claims faster and prevent fraudulent payouts well before they reach settlement.

Improved fraud detection accuracy. By reasoning across multiple investigation streams simultaneously, agentic AI gains comprehensive context for sharper fraud scoring. The result is more precise fraud alerts, faster settlement of clean claims, and fewer customer complaints tied to wrongful investigations.

Higher investigator efficiency. Agents free investigators from the most tedious routines, such as pulling case records, assembling evidence packages, and summarizing investigation files. In production environments, this has driven as much as a 5x increase in SIU’s analytical capacity without added headcount.

Fairer payouts, reduced losses. By blocking illegitimate claims early, agentic AI strengthens payout fairness and protects the insurer’s bottom line. Early adopters report fewer fraudulent settlements and reduced loss reserve leakage.

Build or Buy: What’s Practical

The market for prebuilt claims AI agents is still young in 2025. Mature fraud detection suites (e.g., from Shift Technology, FRISS, CLARA) offer strong AI analytical cores for document and media review, collusion detection, scoring, and case management. But none have so far marketed full, vertical agentic orchestration. To leverage such a capability, an insurer would require a custom agentic backbone tailored to its systems, data sources, and risk policies.

Yet, in most cases, insurers don’t need to start from scratch. Specialized frameworks like LangGraph and LangChain and services by the cloud providers (e.g., Azure AI Foundry by Microsoft Azure, Amazon Bedrock AgentCore by AWS) let firms quickly roll out proprietary AI agents for specific fraud detection tasks. Applying retrieval-augmented generation (RAG) is usually enough to anchor the agents on the insurer’s business context and proprietary data. When it comes to orchestration, most agent engineering frameworks offer built-in dedicated toolkits. If you already use an enterprise platform like Power Automate or Camunda, the path of least resistance would be to manage agent instructions and orchestrate agentic workflows using the platform’s low-code tools, just like you do for conventional business automation.

Reusable Architecture for an Agentic AI System

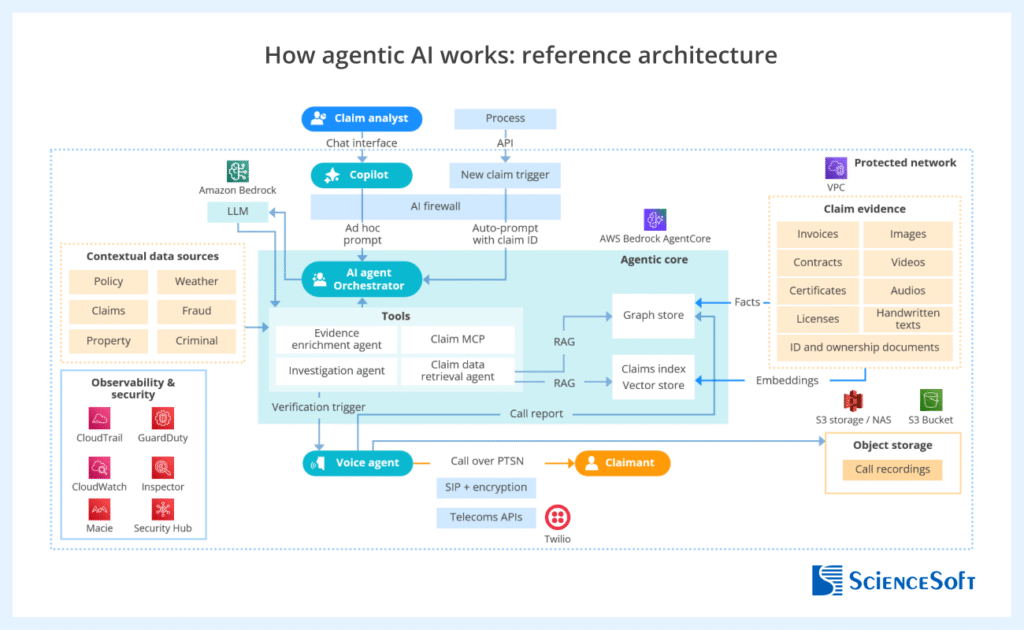

AI architects at ScienceSoft suggest that a pragmatic starting architecture for agentic claims fraud detection should include:

- Task-focused agents that handle specific fraud investigation activities, calling rule-based and AI-supported automation tools as allowed.

- A reasoning agent that synthesizes multi-agent investigation results and composes an auditable brief with recommended next actions for human investigators.

- A supervisory orchestrator coordinating modular agents.

- Integration with the insurer’s claim data sources to trigger investigation events at FNOL and key claim review milestones.

- Integration with the firm’s case management system to enable agent access to investigation policies and establish human governance and SIU feedback loops.

Expanding on Oracle’s canonical architecture of an agentic fraud detection system for Guardian, ScienceSoft created a detailed technical design of a multi-agent AI system for claims fraud detection. The design can be adapted easily to each insurer’s distinct stack.

Agentic AI Guardrails for Security, Accuracy, and Compliance

ScienceSoft consultants recommend building the guardrails into a separate governance layer for streamlined and more auditable agent risk management operations.

Immutable audit trails. Agent communications, tool calls, and API interactions should be recorded with timestamps and rationales. Insurers need this capability to validate the logic behind agent outputs and reconstruct the decision path if a claim is disputed or appealed.
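One common way to make such a trail tamper-evident is to chain entries by hash, so that editing any past record invalidates everything after it. The sketch below assumes an in-memory list and a hypothetical `AuditTrail` class; it shows the chaining idea only. True immutability would additionally require write-once storage, which is outside this example.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body):
    # Serialize with sorted keys so the same entry always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class AuditTrail:
    def __init__(self):
        self._entries = []

    def record(self, agent, action, rationale):
        prev_hash = self._entries[-1]["hash"] if self._entries else GENESIS_HASH
        body = {
            "ts": datetime.now(timezone.utc).isoformat(),
            "agent": agent,
            "action": action,
            "rationale": rationale,
            "prev_hash": prev_hash,
        }
        self._entries.append({**body, "hash": _digest(body)})

    def verify(self):
        """Return True only if no entry was altered, removed, or reordered."""
        prev_hash = GENESIS_HASH
        for entry in self._entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
                return False
            prev_hash = entry["hash"]
        return True


trail = AuditTrail()
trail.record("media", "tool_call:image_forensics", "claim photo flagged for reuse check")
trail.record("supervisor", "assign:voice_agent", "timeline conflicts with FNOL data")
intact_before = trail.verify()

# Simulate tampering with a stored rationale.
trail._entries[0]["rationale"] = "edited after the fact"
intact_after = trail.verify()
```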

Evidence-based reasoning. Each agent’s conclusion must be traceable to original data sources to provide defendable decision evidence for regulators and policyholders.

Human-in-the-loop controls. Investigators must have visibility into AI findings and the ability to override or annotate them. This keeps decision authority within human control and preserves institutional knowledge for model refinement.

Defined automation boundaries. Claim denials, benefit reductions, and legal escalation should never be executed without explicit human sign-off. This ensures accountability for final adjudication and protects against unauthorized AI-driven decisions.
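In code, such a boundary can be as simple as a gate that every agent-initiated action passes through. The following sketch is illustrative: the action names, the `SignOffRequired` exception, and the `approved_by` parameter are assumptions, and a production gate would verify the approver’s identity and role instead of accepting any non-empty string.

```python
# Actions an agent may propose but never execute on its own (assumed names).
RESTRICTED_ACTIONS = {"deny_claim", "reduce_benefit", "escalate_to_legal"}


class SignOffRequired(Exception):
    """Raised when a restricted action is attempted without human approval."""


def execute_action(action, claim_id, approved_by=None):
    if action in RESTRICTED_ACTIONS and not approved_by:
        raise SignOffRequired(f"{action} on {claim_id} needs investigator sign-off")
    return {"claim_id": claim_id, "action": action, "approved_by": approved_by}


# A routine step runs unattended; a denial runs only with a named approver.
routine = execute_action("request_documents", "C-1")
denial = execute_action("deny_claim", "C-1", approved_by="investigator_42")
```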

Right-sized data exposure. Agents should only access the claim data necessary for their specific function, e.g., a media agent sees images, not full policy details. This step reduces PII exposure and addresses data minimization rules under privacy acts (CCPA, GDPR, etc.).
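A minimal way to enforce this is a per-agent allowlist applied before a claim record reaches an agent. The field names and the `AGENT_FIELDS` mapping below are hypothetical; the point of the sketch is the deny-by-default behavior, where an agent without an allowlist entry receives nothing.

```python
# Hypothetical allowlist: the claim fields each agent is permitted to read.
AGENT_FIELDS = {
    "media": {"claim_id", "images"},
    "network": {"claim_id", "claimant_id", "repair_shop"},
}


def view_for(agent_name, claim):
    """Return only the fields this agent is allowed to see (none if unknown)."""
    allowed = AGENT_FIELDS.get(agent_name, set())
    return {key: value for key, value in claim.items() if key in allowed}


claim = {
    "claim_id": "C-1",
    "claimant_id": "P-9",
    "images": ["front.jpg", "rear.jpg"],
    "repair_shop": "Shop A",
    "policy_details": {"limit": 50000},
    "ssn": "000-00-0000",
}
media_view = view_for("media", claim)
unknown_view = view_for("unregistered_agent", claim)
```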

Fairness and bias checks. Regular tests for bias across claimant demographics, locations, and claim types prevent systemic bias, which could damage reputation and compliance.
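As a simple illustration of such a test, the sketch below compares how often claims are flagged in each group and reports the ratio of the lowest to the highest rate. This is only one of many possible fairness metrics, the `region` and `flagged` field names are assumed, and the ratio at which a review is triggered is a policy decision the code does not make.

```python
from collections import defaultdict


def flag_rates(decisions, group_key):
    """Share of claims flagged as suspicious within each group."""
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for decision in decisions:
        group = decision[group_key]
        totals[group] += 1
        flagged[group] += int(decision["flagged"])
    return {group: flagged[group] / totals[group] for group in totals}


def disparity_ratio(rates):
    """Lowest flag rate divided by the highest; 1.0 means equal rates."""
    highest = max(rates.values())
    lowest = min(rates.values())
    return lowest / highest if highest > 0 else 1.0


decisions = (
    [{"region": "A", "flagged": True}] * 2
    + [{"region": "A", "flagged": False}] * 2
    + [{"region": "B", "flagged": True}] * 1
    + [{"region": "B", "flagged": False}] * 3
)
rates = flag_rates(decisions, "region")
ratio = disparity_ratio(rates)
```

A low ratio does not prove bias on its own, since groups can differ in genuine fraud incidence, but it marks where a closer review is warranted.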

A Focused First Step: Deploy One High-Leverage Agent

The safest strategy for insurers is to pick a repetitive, judgment-heavy fraud detection workflow that creates real drag on the investigation team and start by automating the orchestration around it. Realizing an early ROI from a narrow step helps foster the adoption of AI agents and secure management buy-in for broader agentic transformation. For most carriers, a viable area is evidence reconciliation and conversational verification of gaps.

ScienceSoft’s AI consulting and R&D team has recently designed a proof of concept (PoC) for a voice-based AI agent that detects mismatches between claim narratives and high-volume supporting documents and conducts an investigative dialogue with claimants to clarify inconsistent points. The solution can be deployed as part of a multi-agent environment or as a standalone tool connected to an insurer’s existing claims software stack.

In ScienceSoft’s internal runs, the solution enabled a 40%+ rise in investigators’ capacity, 80% quicker summarization of claimant interactions, and more than 20% higher fraud detection rates thanks to additional, sentiment-based fraud indicators. The same orchestration logic can be extended over time to other agents, building toward an end-to-end multi-agent setup.

ScienceSoft’s Voice AI Agent in Action

- When a claim is flagged for anomalies (e.g., mismatched invoices, conflicting FNOL data, reused photos), the orchestrator automatically dispatches the voice agent to call the claimant.

- The agent conducts a live, consented conversation with the claimant through an automated call interface. Instead of reading a static script, it generates context-specific questions on the fly: requesting missing data, clarifying event timelines, or probing for inconsistencies between statements and evidence.

- During the conversation, the agent analyzes linguistic cues that may indicate the claimant’s uncertainty or stress. These indicators serve as behavioral signals that enrich the risk profile.

- As the claimant answers, the agent cross-references information with claim data and third-party sources. For example, if the claimant mentions a repair shop or a hospital, the agent checks for prior fraud alerts tied to those entities.

- After the call, the agent compiles a short report: the questions asked, answers given, sentiment assessment, and any contradictions found. It then submits the report for review to the fraud investigation team.
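The final two steps above, cross-referencing and report compilation, can be sketched as follows. This is not the PoC’s code: the `Turn` type, the field names, and the alert list are hypothetical, and the sentiment label is assumed to come from an upstream speech analysis step that is not shown here.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    question: str
    answer: str


def find_contradictions(claim, stated):
    """Compare facts stated on the call with the claim record, field by field."""
    return [
        f"{key}: claim says {claim[key]!r}, claimant said {value!r}"
        for key, value in stated.items()
        if key in claim and claim[key] != value
    ]


def compile_report(claim, turns, stated, sentiment, alert_list):
    # Entities the claimant mentioned that already carry a prior fraud alert.
    mentioned = {value for value in stated.values() if isinstance(value, str)}
    return {
        "claim_id": claim["id"],
        "transcript": [(turn.question, turn.answer) for turn in turns],
        "sentiment": sentiment,
        "contradictions": find_contradictions(claim, stated),
        "entities_with_prior_alerts": sorted(mentioned & alert_list),
    }


claim = {"id": "C-7", "incident_date": "2025-03-02", "repair_shop": "Shop A"}
turns = [
    Turn("When did the incident happen?", "On March 5th."),
    Turn("Which shop handled the repair?", "Shop A."),
]
stated = {"incident_date": "2025-03-05", "repair_shop": "Shop A"}
report = compile_report(claim, turns, stated, sentiment="hesitant", alert_list={"Shop A"})
```

The report keeps the questions and answers alongside the derived signals, so the fraud investigation team can check each contradiction against what was actually said.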

The demo is available on request from ScienceSoft.